GitLab on a DiskStation

Sometimes, regardless of the possibilities offered by “the cloud”, you want to host important services yourself. For me as a software and DevOps engineer, this applies to my source code. For this reason, I host my GitLab instance myself. Since the GitLab package for DSM provided by Synology is outdated, I will explain here how to install the latest version of GitLab on a DiskStation using Docker.

Preparations

Before we can install GitLab on a DiskStation, we need to make some preparations:

- Install the Docker package from the Synology Package Center.

- Create a Shared Folder for GitLab. For this article, I assume that the Shared Folder is named gitlab and is created on the first volume, so its path on the filesystem will be /volume1/gitlab.

- Activate DSM SSH access. You can also activate Public Key Authentication for DiskStation SSH access following this tutorial.

Set up GitLab on a DiskStation

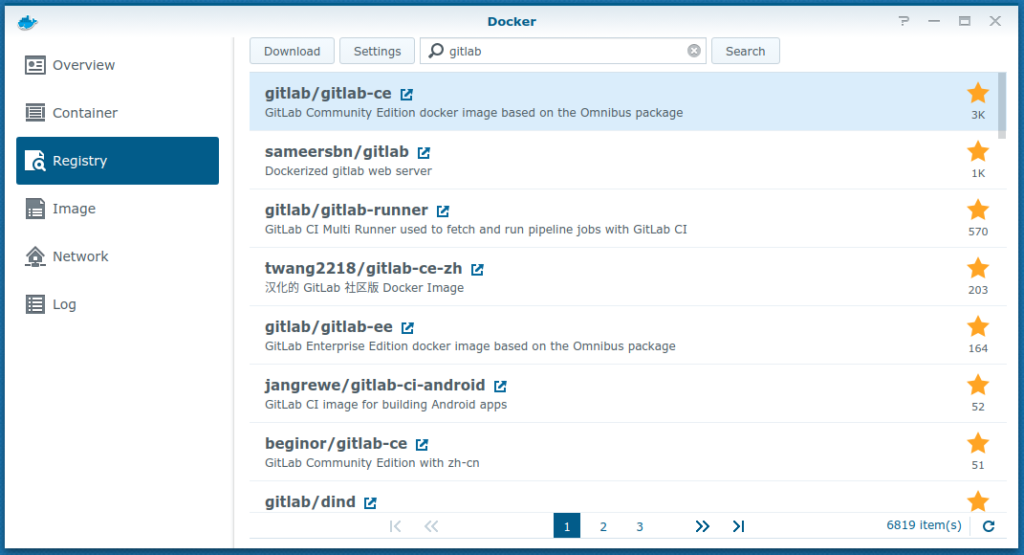

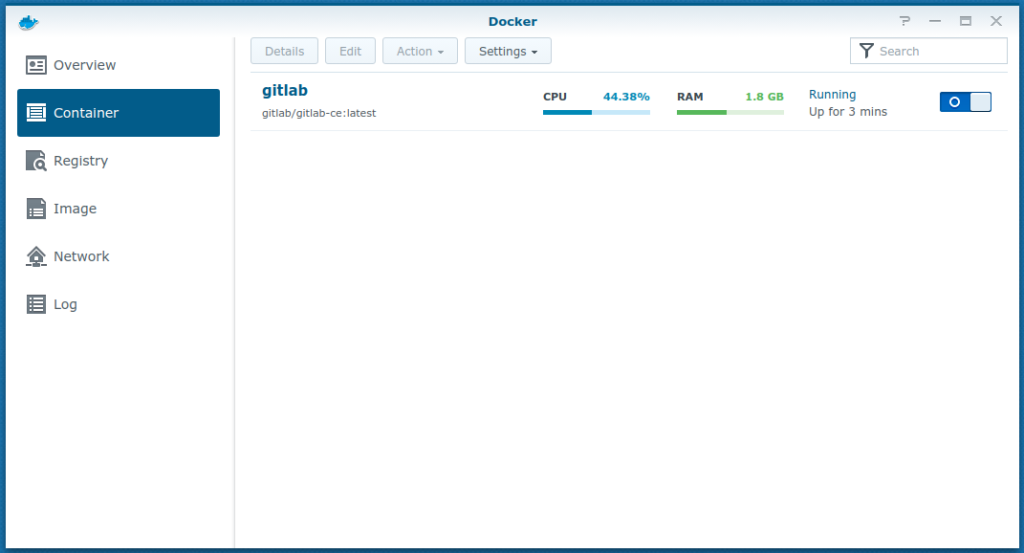

Now we are going to install GitLab. To do so, we need to download the latest docker image of GitLab first. Open the Docker App in DSM, select Registry in the left menu and download the latest gitlab/gitlab-ce image.

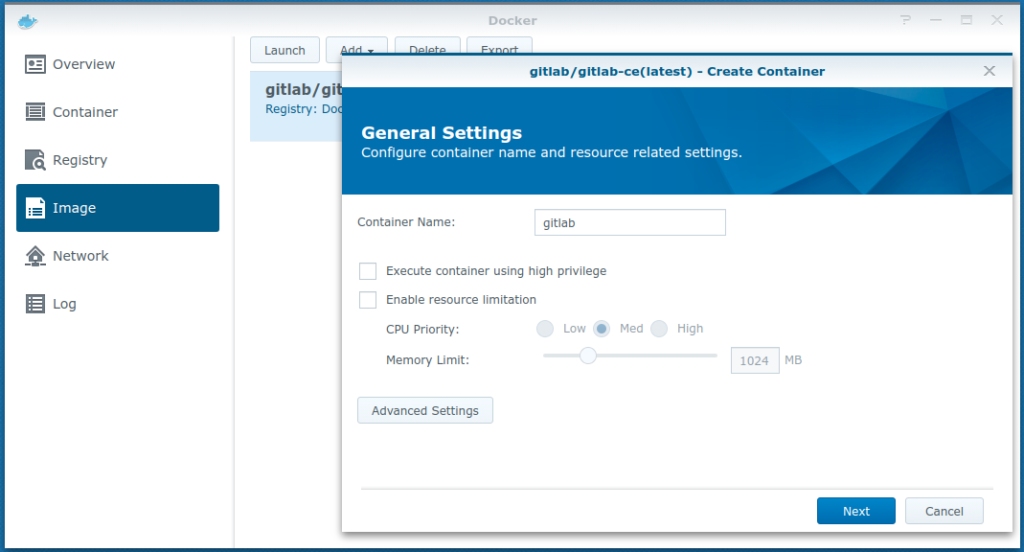

You can watch the download progress under Image in the left menu. As soon as the download is complete, create a new container with Launch in the upper left corner.

Before we click on Next, we have to do some configuration in the Advanced Settings dialog.

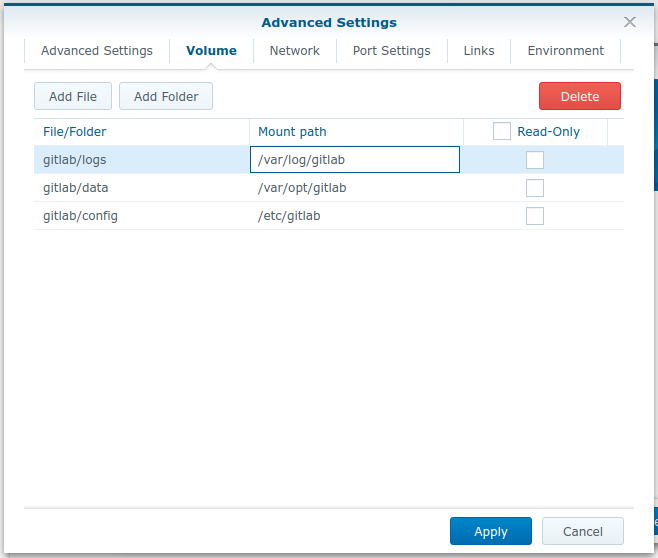

Volumes

We need to persist three directories of GitLab:

- /etc/gitlab – configuration directory

- /var/opt/gitlab – user-generated content (repositories, database, …)

- /var/log/gitlab – logfiles

We do this by defining volumes in the container settings: for each directory above, select a subdirectory of the previously created shared folder and enter the GitLab directory as its mount path. For example, /volume1/gitlab/config is mounted at /etc/gitlab.

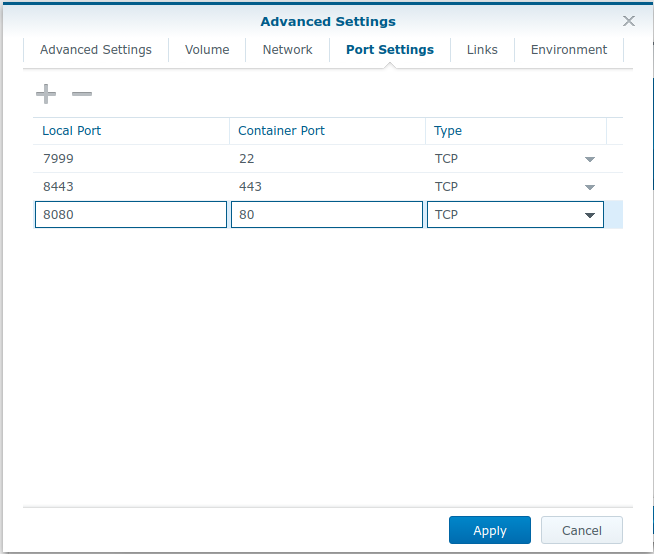
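For readers who prefer the command line, the host side of these mappings can be sketched as follows. This is only a minimal sketch and not part of the original setup: the function name create_gitlab_dirs is hypothetical, config matches the path used later in this article, and the subdirectory names data and logs are assumptions.

```shell
#!/bin/sh
# Sketch: create the host-side subdirectories that get mounted into the container.
# "config" is the name used later in this article; "data" and "logs" are assumed names.
create_gitlab_dirs() {
    share="$1"   # path of the shared folder, e.g. /volume1/gitlab
    for sub in config data logs; do
        mkdir -p "$share/$sub" || return 1
    done
}
```

Called as create_gitlab_dirs /volume1/gitlab over SSH, this leaves three directories that can then be selected in the volume dialog with the mount paths /etc/gitlab, /var/opt/gitlab and /var/log/gitlab.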

Port Settings

To allow access to the GitLab instance from outside, we need to define port bindings. These port bindings will forward DiskStation host ports to the GitLab Docker container.

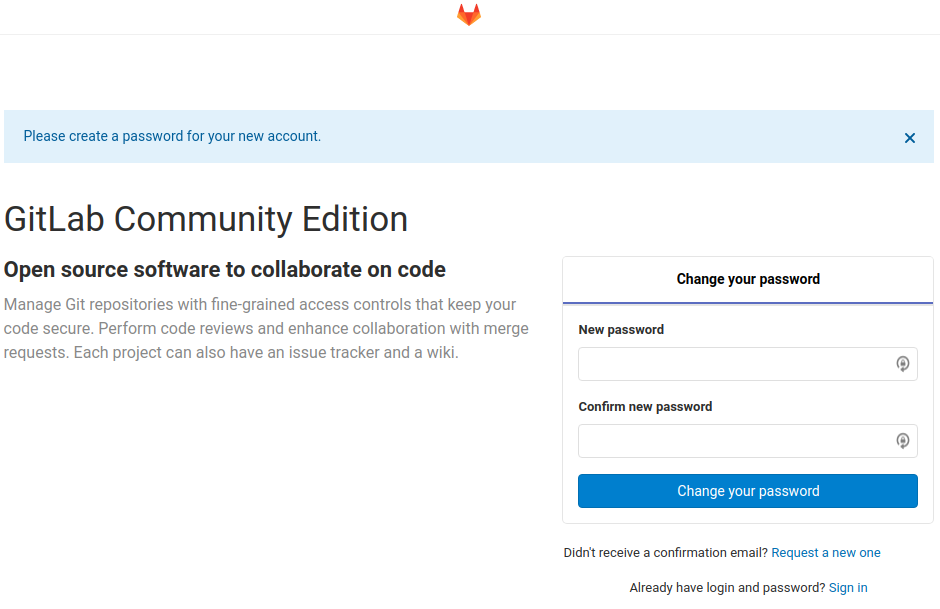
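The volume and port settings from these dialogs correspond roughly to a single docker run call. The sketch below only prints such a command instead of executing it, so it is safe to try. Several values are assumptions rather than the article's exact settings: the container name gitlab, the subdirectory names data and logs, and host port 8443 for HTTPS. Ports 8080 (HTTP) and 7999 (SSH) are the ones used in this article.

```shell
#!/bin/sh
# Dry run: print a docker run command that mirrors the GUI settings.
# Nothing is started; the output can be pasted into an SSH session.
gitlab_run_command() {
    share="${1:-/volume1/gitlab}"   # shared folder from the preparations
    # Each argument is printed on its own line, followed by a backslash.
    printf '%s \\\n' \
        "docker run --detach --name gitlab" \
        "  --publish 8080:80" \
        "  --publish 8443:443" \
        "  --publish 7999:22" \
        "  --volume $share/config:/etc/gitlab" \
        "  --volume $share/data:/var/opt/gitlab" \
        "  --volume $share/logs:/var/log/gitlab"
    printf '  %s\n' "gitlab/gitlab-ce:latest"
}

gitlab_run_command
```

Each --publish pair reads host port first, container port second, which is the same direction as the port bindings in the DSM dialog.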

After applying all changes and starting the container, it will take a couple of minutes for GitLab to bootstrap the instance. After a while, you should be able to access your new GitLab instance at http://<NAS IP>:8080.

On this screen, you can set the password for the root user of GitLab.

Final Configuration

Before your GitLab instance is ready for use, we have to finalize the configuration. Use the DSM Docker application to shut down the container.

When the container is off, connect to your DiskStation via SSH. Open the GitLab configuration file /volume1/gitlab/config/gitlab.rb and adjust (at least) the following configuration:

- external_url – Set to the URL you want to use for accessing GitLab.

- gitlab_rails['gitlab_shell_ssh_port'] = 7999 – Use port 7999 for Git via SSH, since port 22 is already taken by your DiskStation.
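As a rough illustration, the two settings could also be written from the SSH session instead of an editor. This is a sketch under assumptions: the function name append_gitlab_settings is hypothetical, the URL in the usage note is a placeholder, and appending to the end of the file is just the simplest approach (editing the existing commented-out lines works equally well). Note the plain single quotes; typographic quotes copied from a browser will not work in gitlab.rb.

```shell
#!/bin/sh
# Sketch: append the two settings from this section to a gitlab.rb file.
append_gitlab_settings() {
    rb_file="$1"            # e.g. /volume1/gitlab/config/gitlab.rb
    url="$2"                # the URL you want to use for accessing GitLab
    ssh_port="${3:-7999}"   # SSH port, 7999 as in this article
    {
        printf "external_url '%s'\n" "$url"
        printf "gitlab_rails['gitlab_shell_ssh_port'] = %s\n" "$ssh_port"
    } >> "$rb_file"
}
```

A call would look like append_gitlab_settings /volume1/gitlab/config/gitlab.rb https://gitlab.example.com, where gitlab.example.com stands for your own hostname.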

Further Considerations

External Access

You have to decide how to configure external access to your GitLab instance. The simplest option might be to configure a port forwarding from your router to the GitLab ports on your DiskStation. Another possibility is to use DSM’s reverse proxy.

HTTPS Encryption

Both DSM and GitLab have built-in support for requesting Let’s Encrypt certificates for HTTPS. If you’re not using DSM’s reverse proxy, you should configure Let’s Encrypt in your gitlab.rb configuration.

If you’re using the DSM reverse proxy, you have two options: keep using GitLab’s built-in Let’s Encrypt support, or configure HTTPS offloading (the reverse proxy terminates the HTTPS connection and forwards requests internally using HTTP) and let DSM obtain the Let’s Encrypt certificates. When configuring the reverse proxy hosts, be sure to enable WebSocket support.

Automatic Updates

I published some code on GitLab which can help automate updates. Feel free to use it and report back success stories or problems.

The End

After you have finished the configuration, use the DSM Docker GUI to start your GitLab container again.

Congratulations! Your GitLab on a DiskStation is now up and running!

For questions, don’t hesitate to leave a comment below. For personal support, you can also contact me directly.

71 COMMENTS

Cool, just planning the same thing, however want to know a bit more on how to integrate a docker registry (this is now supported in the latest gitlab-ce just by enabling a package, I read…) . Any experiences on that? and the runners?

Thx

Using GitLab’s Docker Registry is quite simple and straightforward. Just enable it in your GitLab settings. The only thing you have to care about is the additional hostname (one for GitLab, one for the registry) in your Reverse Proxy if you’re using one.

For the runners, there are two ways to go: If you have enough RAM, you could install a Virtual Machine on your DiskStation where the runner resides (and there again with Docker or maybe even better with Kubernetes). Alternatively, buy a Virtual Machine from a Cloud Provider (e.g. Google Cloud, AWS, Hetzner Cloud or Netcup (Affiliate Link), …). I would strongly suggest not using the Docker Daemon of your NAS. I’m not even sure if it’s possible at all.

Best regards

Matthias

Thx Matthias, much appreciated!

I found this tutorial useful, thank you.

I am just dabbling for the first time with self-hosted GitLab having used GitHub for a while now. I want to learn about CI/CD and rather than get dragged into paying for minutes etc figured I’d learn how to self-host.

My NAS is a 2013 DS1513+ which means it has only 2GB ram. Unsurprisingly it is painfully slow as a GitLab server so I am considering upgrading to a more recent model such as the 1018+ or the 1618+.

I like the idea of my NAS being my GitLab server too, it seems an efficient use of space and tech. It also means I can easily integrate backing up of GitLab into my existing backups to Synology C2.

However, before I upgrade, I am trying to establish what sort of performance I would get with GitLab. I assume that the main reason for it being slow on my 1513+ is that 2GB is way below the recommended 8GB, so if I get, say, a 1618+ and fit it out conservatively with 16GB, can I expect fast browsing of the GitLab site and efficient pushing and pulling, or would I find I become limited by the CPU? Can I get it close to a dedicated Ubuntu server in terms of performance or am I always going to find it sluggish due to running in a container on a Synology NAS?

Hey,

glad that it helped.

First: You know that you can upgrade the RAM of your DS 1513+? I think 4GB are possible.

On my DS1817+ with 16GB RAM and previously on a DS718+ with 10GB RAM GitLab was really fast. The main limiting factor I see can be the internet connection. I have a 50/10 connection here, so transfer from the NAS to external users/clients only gets 10 Mbit/s – sometimes this feels quite slow. I also helped set up a DiskStation with 8GB RAM and GitLab, which is hosted in a real data center – amazingly fast.

I can’t promise you anything, since it also strongly depends on how many users you have (in sense of parallel accesses) and which “Dedicated Ubuntu Server” you are comparing to. But I can tell you that I’m quite satisfied with my solution.

Thank you!

Yes I saw I can upgrade my NAS to 4GB and that was an option I was thinking about but from what it says on the GitLab site 4GB is still below the recommended, so I have kind of discounted it and it’s time to upgrade anyway.

So what I meant was that browsing the GitLab site even across the LAN with the DS1513+ is slow, sometimes even timing-out slow, i.e. nothing to do with my Internet connection. I just want to be sure there isn’t some sort of inherent slowness about installing GitLab in a Docker container on a Synology NAS in this way, provided I have enough memory, but your answer has reassured me.

I of course take your point about parallel accesses etc, for now it would be pretty much just me.

Thanks for the detailed instructions!

Yesterday I installed GitLab this way on a DS415+ (upgraded from 4 GB to 8GB). Only setting the external_url caused me some issues. After changing gitlab.rb the container started okay, but the URL did not respond. After removing the setting in gitlab.rb I tried the same setting via the GitLab Admin area (logged in as root). Updating “Settings” – “General” – “Visibility and access controls” – “Custom Git clone URL for HTTP(S)” everything worked okay. Just to make sure I tried modifying gitlab.rb a second time, but the effect was the same: no response from the URL I’m using to access GitLab until I removed the setting again.

Today I’ll try to get the HTTPS interface working (port 8443). I checked the container settings, but I still am not able to get a response from the HTTPS interface. I’ll try to configure GitLab with symlinks to my DSM LetsEncrypt certificate. If I succeed I’ll let you know how I did it.

Kind regards, Peter.

My fault: I used the external port number (8080) in the external_url in gitlab.rb. But inside the container GitLab is using port 80, so I should not specify the port number. The 8080 to 80 forwarding is done by docker, so invisible to GitLab.

Once this was solved, configuring SSL was not difficult. I just followed the GitLab Omnibus documentation and first copied the certificate chain and private key I’ve installed in DSM (using symlinks did not work):

# mkdir /volume1/gitlab/config/ssl

# cd /volume1/gitlab/config/ssl

# cp /usr/syno/etc/certificate/system/default/fullchain.pem example.com.crt

# cp /usr/syno/etc/certificate/system/default/privkey.pem example.com.key

The following configuration parameters were needed in gitlab.rb:

external_url "https://example.com"

nginx['ssl_certificate'] = "/etc/gitlab/ssl/example.com.crt"

nginx['ssl_certificate_key'] = "/etc/gitlab/ssl/example.com.key"

Just to make sure, I used SSH access to DSM to get inside the running container:

# docker ps

CONTAINER ID IMAGE …

57309b1735a7 gitlab/gitlab-ce:latest …

# docker exec -it 57309b1735a7 /bin/bash

root@gitlab-gitlab-ce1:/# gitlab-ctl reconfigure

After enabling HTTPS the HTTP port is no longer active, so in docker I removed the 8080 to 80 forwarding from the container settings.

Kind regards, Peter.

what about using the Gitlab package available in Synology package center? Is this tutorial a better way?

It’s quite outdated. For me, it was too old and lacking shiny new features of GitLab, so I decided to try a different way.

I just updated the article and linked a GitLab project which provides some code for enabling automatic updates. I created a cronjob calling this upgrade script every night and it has worked like a charm for over a year now. Here is the code: https://gitlab.com/MatthiasLohr/omnibus-gitlab-management-scripts

We need to persist three directories of GitLab:

its not clear how to do this

/etc/gitlab – configuration directory

/var/opt/gitlab – user-generated content (repositories, database, …)

/var/log/gitlab – logfiles

Matthias,

I am a newbie and so my question is probably stupid, but please bear with me:

Why do I need docker to deploy gitlab on Synology? Can’t I do it directly, without docker?

I am not familiar with docker and I would rather have a conf file under apache that provides access to the gitlab subdomain. I am very familiar with Apache and I’m trying to avoid having to introduce another layer in this environment.

Is this even possible?

Hi,

short answer: Don’t do it.

Long answer: It might be theoretically possible. Certain NAS devices are running on an AMD64 compatible architecture, all GitLab components run on AMD64, so… yes, theoretically. Practically: DSM Linux does not have one of the widespread packaging systems (deb, rpm). That means you have to install all the software components required by GitLab by hand (ruby, git, postgresql, elasticsearch, …). This is very messy work and can additionally interfere with parts of the DSM system. This is a huge modification and you will most probably break some DSM stuff doing that. Additionally, DSM is using nginx, GitLab is using nginx. Yeah, you can put an Apache in between – but why should you? GitLab is so much more than only an HTTP server, so you need much, much more knowledge than only Apache. Actually, Apache knowledge is not needed for GitLab at all. So it doesn’t help at all.

The thing is: When you have so many things that might collide, like GitLab dependencies and the DSM system, that is what Virtual Machines are made for. Separate environments: if one dies, the other is still working. Disadvantage of VMs: a lot of overhead (own OS, own kernel, etc.). Linux Containers are the small variant of big VMs: From the application running within a container it feels like you’re alone on the system. From the outside, a container is just a task running with some special parameters. They share the host’s kernel, nothing else, but that’s much less overhead compared to a VM. Docker is one (of multiple) frontends using this Linux Container feature. Luckily, GitLab offers a full-featured, ready-to-use package for running GitLab: The Omnibus Docker image. So instead of damaging your DSM and investing tens or hundreds of hours in getting many, many different things running on your DSM: Invest a very short time in learning what Docker is. You don’t even have to understand how to use it if you want to follow my tutorial. Using this solution is faster, more stable, safer, … than doing this by hand.

But of course, it’s your choice.

Hi Matthias,

Thank you very much for your direct and clear answer. For someone such as myself who is starting in this space, having clear-cut no-ambiguous advice is extremely helpful.

I will follow your recommendation, install GitLab inside Docker and then figure out how to make it work with the rest of my environment.

Thank you!

You’re welcome! Please let me know if it works or if you have any problems. 🙂

Hi Matthias,

I wanted to write back and let you know what happened in the hope that this might help someone else 🙂

I followed your advice, installed Docker and GitLab, and my Synology drive crashed :). The crash had nothing to do with your post of course, and I mention it here in case it can help someone else: Since my device (DS218+) came with 2 GB of RAM, I could not install Docker, so I had previously upgraded the RAM by adding an 8 GB Crucial stick, but it’s rated at 1600 MHz when in fact the recommended speed is 1866 MHz. I thought nothing of it at first, but as soon as Docker and GitLab were active and the memory beyond 2 GB was used, the NAS started to behave erratically, then crashed.

Fortunately, the folks at Synology answered my ticket right away and told me to remove the ram and reboot. I did so, and everything went back to normal.

So, I upgraded the ram with the correct speed and installed docker and git and I hit my second snag: how do I safely expose GitLab to the outside world?

After several dead-ends, I realized that the solution was staring me in the face!

Keep in mind that I am currently using a URL with a Synology DDNS. That’s important! I don’t know if my solution would work for folks with a different configuration.

1. Control Panel -> Security -> Certificates

2. Created a wildcard Let’s Encrypt cert. Say my top domain is MyDomain.com (fictitious of course), so I keyed that as the main domain and then listed as alias gitlab.mydomain.com among other sub-domains.

3. Once the cert was created, I right-clicked on it and set it as default.

4. I went to the reverse proxy tab (Control Panel -> Application Portal -> Reverse Proxy) and set up the proxy information as follows:

Description: Gitlab

Source:

Protocol: HTTPS

Hostname: gitlab.mydomain.com

Port: 443

Enable HSTS: Checked

Enable HTTP/2: Checked

Enable Access Control: Unchecked

Destination:

Protocol: HTTP

Hostname: localhost

Port: port for Gitlab

On the custom header, I clicked on the drop-down arrow next to create and selected WebSocket.

I saved the configuration.

Lastly, I created a simple conf file for apache to redirect a http request to gitlab.mydomain.com to https://gitlab.mydomain.com since I couldn’t see how to configure the reverse proxy to do that. Here it is in case someone needs it:

SetEnv HOST gitlab.mydomain.com

ServerName gitlab.mydomain.com

ServerSignature Off

RewriteEngine On

# Rule to redirect http to https. I tried using the mod_alias

# redirect permanent but it would not take. This works.

RewriteCond %{HTTPS} off

RewriteRule (.*) https://%{HTTP_HOST}%{REQUEST_URI} [R=301,L]

Obviously, if someone reuses this conf file, they should change the domain name appropriately.

Here I am now, with gitlab up and running, and a happy syno drive 🙂

Thanks Matthias for your advice! I wouldn’t be here without your blog 🙂

Egan.

Glad that it is working now 🙂 And thanks for your update!

Hi Matthias,

Thanks for your post. I’m almost there but I’m missing one detail: What should I set the external_url to be?

My setup is pretty straightforward:

1. https://git.mycompany.com is the external facing URL accessible from the internet.

2. http://localhost:9090 which is mapped to https://git.mycompany.com via reverse proxy.

3. Lastly, in the gitlab container ports setup, I mapped the Synology port 9090 to the GitLab container port 80.

But I’m stumped by the external_url bit. Do I set it to

1. https://git.mycompany.com? That strikes as odd since the reverse proxy will substitute http://localhost:9090 for it

2. http://localhost? But that’s confusing too because, from the container standpoint http://localhost should mean the container host, not the Synology host.

3. Something else? If so what should it be?

Thanks for any tip you might have here, Matthias!

external_url should be https://git.mycompany.com. You want to have externally used links (e.g. in outgoing emails, links in GitLab itself like the cloning link, etc.) pointing to https://git.mycompany.com, not to localhost.

I see. Thanks, Matthias.

I keep getting the same error though after I configure gitlab and try to launch it:

--2020-05-09 01:15:17-- (try: 4) http://localhost:8080/

Connecting to localhost|127.0.0.1|:8080... connected.

HTTP request sent, awaiting response... Read error (Connection reset by peer) in headers.

Retrying.

In the post before, you mentioned you’re using port 9090. Also possible is a problem with HTTP/HTTPS confusion. Decide where to terminate your HTTPS connection, in DSM or in GitLab (for me it’s done by DSM Reverse Proxy, GitLab only listens for HTTP connections).

You are right, Matthias, but actually I had a couple of errors, which I’m listing here in case they help someone else:

1. The error ‘HTTP request sent, awaiting response… Read error’ is an indication that the processor on the DS218+ is not fast enough. Basically, the connection is timing out. To fix it, I added the following line in gitlab.rb: unicorn['worker_timeout'] = 200

That solved my local connection issue, but–to your point in your comment–the remote connection was still broken. This took a bit more investigating, but here’s where I am now:

My setup:

————

1. External Url for GitLab: https://gitlab.Mycompany.com

2. A wildcard cert covers both gitlab.MyCompany.com AND http://www.Mycompany.com

3. https://gitlab.MyCompany.com is mapped by the reverse proxy to http://localhost:9090

Objective: set external_url in gitlab.rb to https://gitlab.MyCompany.com

Challenge: Setting external_url to https://gitlab.MyCompany.com and running gitlab-ctl reconfigure will trigger GitLab to connect to Let’s Encrypt and attempt to get a cert to cover gitlab.MyCompany.com. That attempt will fail because there is already a wildcard cert that covers that subsite.

Resolution (Partial, I think):

————————————–

Add the following directives to gitlab.rb:

1. In the Let’s Encrypt section add:

letsencrypt['enable'] = false

This will prevent the reconfiguration process from attempting to create the SSL certs. That’s good because the reconfiguration process succeeds, but it’s bad because GitLab complains that it can’t find the certs.

2. In the Nginx section add

nginx['listen_port'] = 80

nginx['listen_https'] = false

This seems to fix the problem, but I’m expecting other challenges down the road. For instance, the GitLab documentation mentions that I might have to add a set of headers to the reverse proxy. It also states that I might have to dupe the configurations I listed above for pages and other components of git.

So the current state is that I am up and running, and I am looking forward to the next challenge as I learn to use GitLab, docker, and Synology.

Hi Matthias

First of all, thank you for this tutorial. Super.

We use the Gitlab package from Synology, but the version is too old and it doesn’t look like they will update it soon. So I would like to switch to my own installation.

Do you have experience with migrating from the official Synology Gitlab to your own Docker image?

I don’t want to lose all my configurations.

Kind regards

Markus

Hey,

sorry, never looked into that.

Best regards

Matthias

Hi Markus

I had the same requirement: I wanted to switch from the Synology package to standalone. I went the way of exporting every repo manually and importing it again on the new Gitlab. That was quite some manual work, but it worked without issues. AFAIK newer Gitlab versions (I think I saw it on 13) allow exporting all repos together.

An alternative for you might be to use the Synology Gitlab package from https://github.com/jboxberger/synology-gitlab . He packages the latest Gitlab releases into Synology packages which can be installed as an upgrade to the official (and very outdated) Synology package. This worked very well for me for quite a while.

Ouh, that’s a nice project! Didn’t know about that. Downside here: Every upgrade requires manual steps. For my docker solution, I have a shell script/cronjob for auto upgrades.

Yes, the manual update is a pity. You have to download a 900 MB file, then upload it to the Synology Package Center again. But if you already have the Synology Gitlab running, it’s far easier than setting Gitlab up yourself.

Your update script, does it do anything special?

I use https://github.com/pyouroboros/ouroboros which updates the containers automatically. Also it sends me a Telegram so I get informed. Additionally I have a cron service which notifies me if one of my services is offline for >30 Minutes 🙂

That looks complicated 😀 I just use this: https://gitlab.com/MatthiasLohr/omnibus-gitlab-management-scripts. Works perfectly on DSM, including the cronjob for update-gitlab.sh.

Sounds interesting …. I’ll probably give it a try

Hi Matthias

Thank you for your step by step explanations!

I set it up successfully. I also (manually) transferred all (20) of my repos from the official Synology Gitlab to this standalone one. It works well, but I am a bit surprised about the high CPU load during idle time. What are your experiences there? I have a DS218+ with 10 GB RAM. The Synology packages (separate containers for Gitlab, PostgreSQL, Redis) together take only a few percent of the CPU when idle, whereas the official image Gitlab container takes 10% or more continuously, even after days.

Uhm, interesting. I’m also experiencing the same (DS 1817+, 16GB RAM). I checked top output from within the container, gitlab-exporter seems to consume the most CPU time here. Can you confirm that? Meanwhile, I will take a deeper look…

Looking on the Synology Docker Overview, I see following CPU load (no usage of Gitlab):

Synology Gitlab: /var/log/gitlab/gitlab-rails/production.log /var/log/gitlab/gitlab-rails/sidekiq_exporter.log /var/log/gitlab/nginx/gitlab_access.log /var/log/gitlab/gitlab-workhorse/current /var/log/gitlab/gitlab-rails/production_json.log /var/log/gitlab/gitlab-rails/production.log /var/log/gitlab/gitlab-rails/production_json.log /var/log/gitlab/gitlab-rails/production.log /var/log/gitlab/puma/puma_stdout.log /var/log/gitlab/gitlab-rails/sidekiq_exporter.log /var/log/gitlab/gitlab-rails/production_json.log <==

(Re-post because parts of the message got somehow discarded)

Looking on the Synology Docker Overview, I see following CPU load (no usage of Gitlab):

Synology Gitlab: <2%

Synology PostgreSQL: 0%

Synology Redis: 0.5%

Gitlab (separate Docker installation, separate DB but same repos): 14%

Looking on the internals, I also see that the exporter takes most of it:

puma: 7.25 %

sidekiq: 2.35%

postgres: 1.25%

Also I noticed, that I have a lot of log messages, almost every second. If you want, I can provide you a log separately, your commenting system somehow broke it.

Sorry for that. If you want, just send it to my contact email address (imprint).

However, I disabled prometheus in my gitlab.rb and the CPU usage decreased to ~6%. Still a lot for idling, but less than 10%. Hence, it might be worth another look.

Thanks for the suggestion!

I now also disabled it.

Furthermore, I enabled some log configs and set them to warning level:

registry['log_level'] = "warn"

gitlab_shell['log_level'] = 'WARN'

gitaly['logging_level'] = "warn"

Not sure if this helps, I still see log entries every few seconds.

However, after some hours, the CPU load went down to 3.65%, which is almost as low as the Synology Gitlab. (NB: This is Gitlab 13, whereas my Synology Gitlab is still on 12, so not fully comparable.)

I’m up and running without too much trouble thanks to this tutorial. Thanks

Hi, I also wanted to give GitLab a try on my Synology with Docker. The installation and configuration seem pretty straightforward.

But when I start the container, it always fails with an “unexpected stop” message.

Anyone else running into the same issue? Or any ideas?

Can you please provide some logs? Otherwise it’s quite hard to say anything…

In the meantime I deleted the container and created it anew. And this time it worked as expected.

Thanks for reaching out

Hello Matthias,

I was able to figure out the issue but couldn’t respond to my post (it hadn’t shown yet). The issue was the DNS resolver and in this particular case pi.hole was blocking access. I updated my settings and can now access the page however chrome now complains about the cert being wrong. I had followed someone’s instructions above about certs that I’ll need to revert or fix it so it allows https. Not sure, but I’m on a path to getting this up and running correctly.

Thank you for the instructions and response!

Hello! I went through your steps above and everything seems to be working except for the external_url step; I cannot access the external URL. I simply did external_url "http://internal-gitlab.dev"

I can ssh into gitlab with `ssh -T git@ -p 7999` and get the right response. If I go through the steps to init a repo, remote add, and finally do a git pull, it is not able to resolve the domain.

$ git pull

ssh: Could not resolve hostname internal-gitlab: Name or service not known

fatal: Could not read from remote repository.

For reference, the git config is referencing the url as

url = git@internal-gitlab:agitlabuser/testproject.git

As far as I can tell I followed your steps exactly, ports and all. Are there any other synology, network (router, etc), or local machine settings I may need to change to be able to access the url locally? I have no intention of accessing the repo outside my home network, so I don’t think I need to open anything externally either.

I’ve done a few searches and even tried PETER HOOGENDIJK’s solution above, although it’s not the exact same issue, to no avail.

Hopefully you have a moment to help me out. Thanks!

If I understand you correctly you want to use it only internally, in your private/home network, right? In this case, you have to ensure that the hostname used for your GitLab instance is known to all clients, e.g. by adding it to your local DNS resolver or adding it to the hosts files of all clients in your network. The message “Could not resolve hostname internal-gitlab: Name or service not known” is not connected to GitLab/DSM at all, this is the problem of unresolvable DNS names only.
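The hosts-file option mentioned here could look roughly like the sketch below for a Linux or macOS client. It is an illustration under assumptions: the function name add_hosts_entry is hypothetical, and the IP address in the usage note is a placeholder for the address of the DiskStation. The function adds an entry only if the hostname is not already present in the file.

```shell
#!/bin/sh
# Sketch: add "IP<TAB>hostname" to a hosts file unless the name is already listed.
add_hosts_entry() {
    hosts_file="$1"   # usually /etc/hosts (needs root to modify)
    ip="$2"           # address of the DiskStation in the home network
    name="$3"         # hostname used for GitLab, e.g. internal-gitlab.dev
    # -F: match the name literally, -w: match whole words only
    if grep -qwF -- "$name" "$hosts_file" 2>/dev/null; then
        return 0
    fi
    printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
}
```

Run as root, a call such as add_hosts_entry /etc/hosts 192.168.1.10 internal-gitlab.dev would make the name resolvable on that one client; every other client needs the same entry, which is why a local DNS resolver is usually the more convenient choice.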

Ok. Now that I have GitLab running on my DSM, what would be the next step? Would you have a post on creating a first CI/CD pipeline? Or should I just follow the regular GitLab CI/CD docs, but point to my on-prem instance instead of the GitLab server in the cloud?

Next step is simple: Just use it like you would use GitLab.com (including CI/CD). The only difference to other tutorials might be the hostname and maybe ports (http, https and git+ssh ports), depending on your configuration. For example, if you configure a GitLab runner, it will ask you for the URL of the instance you want to use for the runner.

Hi Matthias! Thanks for your tutorial, it was very helpful.

I have a question; it would be nice if you could help me.

How do I use SSH port 7999? I’m using Git Bash and the “git push” command was automatically sent to port 22.

I tried using a “.ssh/config” file, but is there another solution?

I guess you did not set gitlab_rails['gitlab_shell_ssh_port'] = 7999 in your gitlab.rb file. Don’t forget to run gitlab-ctl reconfigure after changing it.
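As an alternative to a .ssh/config entry, Git also accepts the port directly in an ssh:// URL. A minimal sketch of how such a remote URL is put together follows; the function name gitlab_ssh_url, the hostname and the project path are placeholders, and only port 7999 comes from this article.

```shell
#!/bin/sh
# Sketch: build an ssh:// remote URL that carries a non-standard SSH port.
gitlab_ssh_url() {
    host="$1"      # hostname of the GitLab instance
    port="$2"      # host port forwarded to the container's SSH port
    project="$3"   # project path, e.g. mygroup/myproject.git
    printf 'ssh://git@%s:%s/%s\n' "$host" "$port" "$project"
}

gitlab_ssh_url gitlab.example.com 7999 mygroup/myproject.git
```

The printed URL can be passed to git clone or git remote set-url origin, so pushes go to port 7999 without any client-side SSH configuration.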

Thank you for your response. I found the problem. I disabled the ssh service on the synology NAS. I open the 22 port on the firewall, but could I disable ssh service for DSM ?

I found the solution, thank you for your tutorial.

I set gitlab_rails['gitlab_shell_ssh_port'] = 7999.

Opened port 7999 on the Synology firewall.

Forwarded all TCP requests on port 22 on my router to my Synology:7999.

Thanks for the nice tutorial.

I got stuck at “external access”. It works for me using the Synology reverse proxy, so that I can access my GitLab instance using gitlab.mydomain.synology.me; however, I cannot get git clone working with SSH.

Any idea? Thanks in advance

I mean, i cannot make git to work with the domain: the git@gitlab.mydomain.synology.me

well, i just forgot to forward in the router the specific port to the local IP of the synology. docker and the reverse proxy will do the rest

Thank you! The magic word for me was the “root” user. I tried admin, administrator, my email address, users from Synology accounts, nothing worked! I tried looking for the main user on Google, but found nothing!

I have tried to install GitLab more than 10 times on Synology before… thank you, thank you!!!

Hi,

thank you for this tutorial. Everything works but I can’t get emails sent to new users (I configured it as a closed GitLab, but when creating a user myself, the initial password is sent via mail). I used this tutorial from GitLab: https://docs.gitlab.com/omnibus/settings/smtp.html but I can’t find the problem:

root@gitlab-ce:/# gitlab-rails console -e production

:smtp

——————————————————————————–

Ruby: ruby 2.7.2p137 (2020-10-01 revision 5445e04352) [x86_64-linux]

GitLab: 14.0.5 (25fc1060aff) FOSS

GitLab Shell: 13.19.0

PostgreSQL: 12.6

——————————————————————————–

ActionMailer::Base.delivery_method

Loading production environment (Rails 6.1.3.2)

irb(main):001:0> :smtp

=> :smtp

irb(main):002:0> ActionMailer::Base.delivery_method

=> :smtp

irb(main):003:0> Notify.test_email('mail@xxx.net', 'Hello World', 'This is a test message').deliver_now

Delivered mail 60f363e07db9f_7d05a8c7669b@gitlab-ce.mail (60084.5ms)

Traceback (most recent call last):

1: from (irb):3

Net::ReadTimeout (Net::ReadTimeout)

irb(main):004:0>

Thank you.

Hi, not sure about the issue, therefore just guessing: It looks like (“Net::ReadTimeout”) GitLab is not able to connect to the mail server. Assuming that you configured everything correctly, it could be related to internal networking. GitLab is running in a Docker container. If you configured the DSM firewall to block certain things, you need to allow GitLab, i.e., connections from Docker, to pass your firewall. I did this by adding a firewall rule for 172.16.0.0/12.

Hi. The problem was a wrong SMTP port for my mail provider. One day later I found the mails in my spam folder. The firewall on my Synology was not the problem. Sorry that I didn’t mention that I had disabled the firewall for testing. Thank you.
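A wrong port can plausibly produce this kind of read timeout: the TCP connection opens, but the server never sends the plain-text greeting the client is waiting for (for example, when the port expects TLS from the first byte). The following is a minimal sketch for checking that, not a replacement for GitLab’s own mail test; the function name `read_smtp_banner` is hypothetical.

```python
import socket
from typing import Optional


def read_smtp_banner(host: str, port: int, timeout: float = 5.0) -> Optional[str]:
    """Connect and return the plain-text SMTP greeting, or None if none arrives."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            conn.settimeout(timeout)
            data = conn.recv(512)  # a plain SMTP server greets first
    except OSError:
        # Covers refused connections, DNS failures, and timeouts.
        return None
    line = data.decode("ascii", errors="replace").strip()
    # A regular SMTP greeting starts with status code 220.
    return line if line.startswith("220") else None
```

Calling `read_smtp_banner("mail.example.net", 587)` (host name is a placeholder) should return a line starting with `220` if the port speaks plain SMTP; `None` hints that the port or host in `gitlab.rb` deserves a second look.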

Synology with DSM 7: installed Docker, downloaded the `gitlab-ce` image, chose the `latest` tag, and everything worked fine. Opened the browser, pointed it at :, and got the GitLab login page. Username root and password 5iveL!fe do not work. Any idea how to log in? I haven’t changed any configuration files. I can access the container’s host terminal as the root user, but I have no idea how to log in via the web interface. I’ve tried admin@local.host, admin, and various other things from Google searches; nothing works.

I wrote this article in 2019; since then, Omnibus GitLab may have changed significantly. Did you check the official GitLab documentation at https://docs.gitlab.com/ee/install/docker.html?

I looked everywhere, then I found this page:

https://docs.gitlab.com/ee/security/reset_user_password.html

Opening the container’s terminal window via Docker, I executed the command `sudo gitlab-rake "gitlab:password:reset[root]"` to set a new root password; then I was able to log in using `admin` and the new password. That was a seriously huge waste of time for something so simple!!! Why is the default password not what it states in the docs!? That’s really frustrating!

The current documentation (https://docs.gitlab.com/ee/install/docker.html#install-gitlab-using-docker-engine) states that the initial password can be found using `sudo docker exec -it gitlab grep 'Password:' /etc/gitlab/initial_root_password`.

Just a reminder to whoever copies this command: Password: must be wrapped in plain single quotes. When you copy from the browser, they may be changed to typographic quotes, which the shell does not treat as quotes.
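If a copied command ends up with typographic quotes, they can be converted back before pasting into a terminal. A minimal sketch in Python; the helper name `straighten_quotes` is made up for this example.

```python
# Map typographic quotes to their plain ASCII counterparts.
QUOTE_MAP = str.maketrans({
    "\u2018": "'",  # left single quotation mark
    "\u2019": "'",  # right single quotation mark
    "\u201c": '"',  # left double quotation mark
    "\u201d": '"',  # right double quotation mark
})


def straighten_quotes(command: str) -> str:
    """Replace curly quotes in a copied command with straight ones."""
    return command.translate(QUOTE_MAP)


copied = "grep \u2018Password:\u2019 /etc/gitlab/initial_root_password"
print(straighten_quotes(copied))
# grep 'Password:' /etc/gitlab/initial_root_password
```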

Hi. I know this tutorial might be a bit outdated in 2022, but I followed it. I got the Docker container and access via :8080 working. My problem is that I can neither pull nor push. I added SSH keys to the GitLab instance and forwarded “7999” on my router and in the Synology firewall. I added it to gitlab.rb as described above. The port is reachable (tested via telnet -p 7999).

When I do a “git pull” I get

“ssh: Could not resolve hostname gitlab-gitlab-ce1: Temporary failure in name resolution

fatal: Could not read from remote repository.

Please make sure you have the correct access rights

and the repository exists.

”

I found online that this failure was a GitLab bug 5 years ago.

Any ideas?
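One possible reading of the error, offered as a guess: the remote URL contains the container’s hostname (gitlab-gitlab-ce1), which only resolves inside Docker. A clone URL using a reachable host name and the forwarded SSH port would look like the output of the sketch below. The host `gitlab.example.com` and the project path are placeholders, and `ssh_clone_url` is a hypothetical helper.

```python
def ssh_clone_url(host: str, port: int, project_path: str, user: str = "git") -> str:
    """Build an ssh:// clone URL, which allows a non-default SSH port."""
    path = project_path.strip("/")
    if not path.endswith(".git"):
        path += ".git"
    return f"ssh://{user}@{host}:{port}/{path}"


# Placeholder host and project; 7999 is the forwarded port from the comment above.
print(ssh_clone_url("gitlab.example.com", 7999, "group/project"))
# ssh://git@gitlab.example.com:7999/group/project.git
```

An existing clone can be pointed at such a URL with `git remote set-url origin <url>`.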

Hi Matthias,

Thanks for this post! It got me on the right track for setting up GitLab on the latest DSM 7.1.

Do you have any step-by-step tutorials for getting your https://gitlab.com/MatthiasLohr/omnibus-gitlab-management-scripts update script to work on DSM 7.1?

Thanks!

Thanks for reaching out! I don’t have a tutorial here, but if you have problems, others might have them too. I would suggest that you open an issue in that repository, and we will try to figure out where the problem is and which part is not sufficiently documented yet.

Best regards

Matthias

Hey Matthias,

Thanks for the tutorial. I have freshly set up GitLab. But the start page is not the one where you can set the root user’s password; it’s the normal login page where you can either log in or register. Did I do something wrong? How can I set the password for the root user?

Regards,

Pepe

I’m not sure how I set the initial password. If I remember correctly, I was asked to enter a password… However, just follow the official docs: https://docs.gitlab.com/ee/security/reset_user_password.html

@Pepe,

They made the change to increase out-of-the-box security by eliminating the chance of an instance being taken over before a root password is set.

You can find the password here after your initial install:

/etc/gitlab/initial_root_password

Just use the share on your Synology, or File Station, to pick up the file and read it.

Src: https://forum.gitlab.com/t/how-to-login-for-the-first-time-local-install-with-docker-image/55297/7
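Once the file has been copied from the share, the password line can also be picked out programmatically. A small sketch, assuming the file contains a line of the form `Password: <value>` (which is what the `grep 'Password:'` command from the documentation relies on); the sample text below is invented for illustration.

```python
from typing import Optional


def extract_initial_password(file_text: str) -> Optional[str]:
    """Return the value of the first 'Password:' line, or None if absent."""
    for line in file_text.splitlines():
        if line.startswith("Password:"):
            return line.split(":", 1)[1].strip()
    return None


# Invented sample content, not a real password file.
sample = "# Comment lines may precede the entry.\n\nPassword: example-secret\n"
print(extract_initial_password(sample))  # example-secret
```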

@Matthias,

Thank you for this awesome tutorial 🙂 It helped me a lot to get this set up quickly.

Wes

Hi, I am facing a problem and haven’t found the reason yet!

I deployed GitLab on my NAS through Docker, and everything works except GitLab Pages.

When I push the blog (Hexo), the .yml pipeline does produce static pages, but when I click the page’s URL, it opens a new page with a 404…

Actually, I do not have Pages running with my GitLab.

Did you check https://docs.gitlab.com/ee/administration/pages/#the-gitlab-pages-daemon? How did you set up pages on your NAS?

I set it up within GitLab itself. I reconfigured gitlab.rb because the GitLab Pages feature is disabled by default.

I have now checked https://docs.gitlab.com/ee/administration/pages/#the-gitlab-pages-daemon, but it is too long for me to find the important parts, so I am looking for help. Thanks a lot!