Kubernetes Cluster on Hetzner Bare Metal Servers

If you want to run your own Kubernetes Cluster, you have plenty of possibilities: You can set up a single node cluster using minikube locally or on a remote machine. You can also set up a multi node cluster on VPS or using managed cloud providers such as AWS or GCE. Alternatively, you can use hardware, e.g. Raspberry Pis or bare metal servers. However, without the functionality provided by a managed cloud provider, it is difficult to take full advantage of the complete high availability capabilities of Kubernetes. We have tried – and present here the instructions for a highly available Kubernetes cluster on Hetzner bare metal servers.

Why Bare Metal?

GCE and AWS are very expensive, especially when your cluster is growing. Raspberry Pis are cheap, but also quite limited regarding resources. Usual VPS providers lack support of High Availability, and a single node cluster is per definition a whole Single-Point-of-Failure – nice for testing, but not for production.

It is also possible to set up a Kubernetes cluster on bare metal. In this case, implementation of High Availability depends on the features the colocation provider is offering. Somehow, you have to create a machine-independend load balancer, which redirects traffic to working nodes and ignores broken nodes.

Dorian Cantzen (extrument.com) and I have taken up that challenge. In this article, we will describe the steps to set up a production ready Kubernetes cluster on Hetzner bare metal servers using the Hetzner vSwitch feature.

Important notice: Neither the author of this article (Matthias Lohr) nor Dorian Cantzen are affiliated with Hetzner nor paid for writing this article. This article is a technical report, initiated by Matthias Lohr, summarizing the findings when trying to find a feasible solution for a highly available Kubernetes cluster on bare metal machines. We found Hetzner as part of one possible solution, most probably there are more eligible providers out there for a similar solution.

The High Availability Challenge

The basic goal of a server cluster is reliability in terms of high availability and fault-tolerance. If one component of a cluster fails, cluster logic will automatically use another component for the task.

Generally, you can devide cluster components in three categories: computational resources, storage resources and networking. The core component of Kubernetes is a scheduler for computational loads (Pods), which provide services such as web portals etc. Ceph clusters or Kubernetes-based solutions like Rook provide redundant storage. High Availability for the remaining part, the networking, requires special support by the colocation provider. Usually, when using mainstream hosters like Hetzner, a server has a single NIC with a single IP (ok, one IPv4 and one IPv6) address. Typically, a DNS record points to one or multiple IP addresses. However, when pointing to multiple IPs, and the server behind this IP is not available, the user will get connection errors. So, DNS can’t help to provide a solution here. What we need is an IP address which can be shared between multiple servers.

We found that using Hetzner vSwitches, it is possible to route IPs or IP subnets into a VLAN (IEEE 802.1Q) where each server can be connected. It’s then up to the servers to decide which one should reply to incoming traffic for this/these IP(s). IP migrations can be done completely within the cluster servers, without notifying an external API.

Setting up a Kubernetes Cluster on Hetzner Bare Metal Servers

Create VLAN (Hetzner vSwitch)

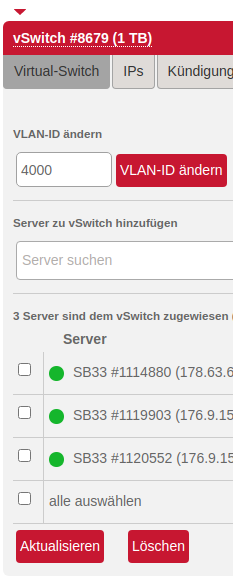

First, we have to create the VLAN, which connects the servers. You can do that in the Hetzner vSwitch configuration area:

After the vSwitch is created, you have to assign the servers you want to add to your Kubernetes cluster to the vSwitch:

On the IPs tab, you can order additional IPs or IP subnets, which are routed to the vSwitch and therefore not assigned to a single server. We will use MetalLB to manage these IP address(es).

Server Setup

Now, you should set up the servers with your favorite OS capable of running Kubernetes. After the standard setup has finished, we need to configure the VLAN and the additional IPs. According to the official Hetzner documentation, you have to create a virtual network interface with VLAN taggings. But since you want to use the IPs within the Kubernetes cluster, you have to add some additional ip rules.

Below you will find an example for a working netplan configuration. This configuration uses 10.233.255.0/24 as internal network range for cluster internal communication and 321.321.321.32/28 as subnet assigned to the vSwitch. 10.233.0.0/18 is the default service IP range used by kubespray, 10.233.64.0/18 the according default Pod IP range.

network:

version: 2

vlans:

# Configure vSwitch public

enp4s0.4000:

id: 4000

link: enp4s0

mtu: 1400

addresses:

- 10.233.255.1/24

routes:

- to: 0.0.0.0/0

via: 321.321.321.33

table: 1

on-link: true

routing-policy:

- from: 321.321.321.32/28

to: 10.233.0.0/18

table: 254

priority: 0

- from: 321.321.321.32/28

to: 10.233.64.0/18

table: 254

priority: 0

- from: 321.321.321.32/28

table: 1

priority: 10

- to: 321.321.321.32/28

table: 1

priority: 10Alternatively, you can use our Hetzner vSwitch ansible role which we developed during our experiments.

Test if the VLAN works properly by try to ping all nodes using their private IP addresses (10.233.255.1, 10.233.255.2, …).

Setup Kubernetes

Now, since the networking stuff is up and running, you are ready to install Kubernetes. We did that using Ansible/kubespray, which offers a quite convenient and production ready solution for managing bare metal Kubernetes clusters. Use the server’s internal IP addresses in your inventory.

# example inventory

[all]

node1 ip=10.233.255.1 etcd_member_name=etcd1

node2 ip=10.233.255.2 etcd_member_name=etcd2

node3 ip=10.233.255.3 etcd_member_name=etcd3Before installing the network plugin, ensure that you set the right MTU for your Kubernetes networking plugin. Hetzner vSwitch interfaces have a MTU of 1400. When using e.g. Calico, which has a 20 bytes overhead, you need to set the MTU for Calico to 1380.

Install and configure MetalLB to use the IP/subnet assigned to the Hetzner vSwitch. Please ensure, that you do not configure the whole subnet, but exclude the two first and the last IP address.

Example

subnet assigned: 321.321.321.32/28

IPs in subnet: 321.321.321.32 – 321.321.321.47

subnet address (not usable): 321.321.321.32

subnet gateway address (used by Hetzner): 321.321.321.33

subnet broadcast address (not usable): 321.321.321.47

remaining usable IPs/MetalLB range: 321.321.321.34 – 321.321.321.46

That’s it! Now you can start using Kubernetes Services with type LoadBalancer and one of the usable IP addresses to get traffic into your cluster. MetalLB will care about assigning these IP addresses to working nodes. If one node goes down, MetalLB will reassign the IP address to a working node.

The only bottleneck we didn’t figure out yet: Does Hetzner have a redundant (highly available) setup for the vSwitches…?

21 COMMENTS

Hey Matthias,

did you try the whole vswitch thing with a external subnet? I’m using cilium without kube-proxy and i can curl my vswitch external ip on the node but not from the internet…

Any ideas?

Max

Hi,

yes, external subnet directly linked to the vSwitch, not a single server. What you’re describing could have several reasons, my first bet would be a routing issue (packets not leaving using the vSwitch).

Best regards

Matthias

Yeah i’ve found the issue, cilium needs to know the device which the traffic comes in, so in my case i set devices: “vlan4000” in the cilium config.

Thanks!

I really apreciate this tutorial!!! simply amazing, in my case I was stuck sue to mtu value, the trick of put the mtu to 1380, works like a charm.

Thank you.

Hello Mathias, I’m following this guide and it’s amazing but I’m stuck after the last step, I configure my metalLB to use the new IP-ranges assigned to the vswitch but I’m still cannot access any of this ips from outside, How can I do? I review all the hetzner doc but I don’t think any clue

What exactly is “the last step” for you? What do you mean with “access” (Ping, HTTP, ssh, …; from where)? Are services of type LoadBalancer created? Do they have an assigned IP from your vSwitch subnet? Have have you used my ansible role for vswitch configuration? If no, how did you create the routes?

the metalLb configuration, it’s assigning the external Ip correctly to the nginx service I created to test it, I added your configuration rules and added to my servers netplan yaml only change it the public ip 321.321.321.32/28

enp3t0.4001:

id: 4001

link: enp3t0

mtu: 1400

addresses:

– 10.232.253.6/24

routes:

– to: 0.0.0.0/0

via: 18.35.140.185

table: 1

on-link: true

routing-policy:

– from: 18.35.140.184/29

to: 10.233.0.0/18

table: 254

priority: 0

– from: : 18.35.140.184/29

to: 10.233.64.0/18

table: 254

priority: 0

– from: : 18.35.140.184/29

table: 1

priority: 10

– to: : 18.35.140.184/29

table: 1

priority: 10

I assume it’s something with my netplan but I don’t know why did you see any wrong?

So far, it looks ok. What did you configure for MetalLB? Which range?

Ok here it’s my config.yaml for metalLB I follow the instructions on their documentation, do you see any wrong?

apiVersion: v1

kind: ConfigMap

metadata:

namespace: metallb-system

name: config

data:

config: |

address-pools:

– name: default

protocol: layer2

addresses:

– 18.35.140.186-18.35.140.190

Looks also good to me. Sorry, no idea just from looking at these configs. If you want to have me a look at your servers directly, feel free to send me an email.

Great Guide! We are actually also planning on basing our new cluster architecture on this system. I’m currently working on a Proof of concept cluster, but have run into one pretty annoying issue regarding the Calico CNI. Maybe you’ve come across the same thing and already have a solution for that 🙂

After following your Guide, our hosts successfully recieve packets (confirmed by traceroute) of a public subnet IP which is allocated to a service by MetalLB.

So this already confirms that servers are answering to Packets of the vSwitch and the general system works.

But now I’ve run into the problem that Calico somehow does not connect the incoming packets to the actual service. Connections just time out.

The cluster is running a vanilla Calico install, thus we didn’t change the pod CIDR (192.168.0.0/16), nor did we change the default service CIDR (10.96.0.0/12).

This is our netplan yaml:

network:

version: 2

renderer: networkd

vlans:

vlan.4001:

id: 4001

link: enp3s0

mtu: 1400

addresses:

– 10.0.1.2/24

routes:

– to: 0.0.0.0/0

via: xxx.xxx.xxx.217

table: 1

on-link: true

routing-policy:

– from: xxx.xxx.xxx.216/29

to: 10.96.0.0/12

table: 254

priority: 0

– from: xxx.xxx.xxx.216/29

to: 192.168.0.0/16

table: 254

priority: 0

– from: xxx.xxx.xxx.216/29

table: 1

priority: 10

– to: xxx.xxx.xxx.216/29

table: 1

priority: 10

Since we’re not using any custom networking mode, the Calico MTU was set to 1380 (because of the 20 bytes overhead described in their docs) in the calico-config ConfigMap.

I rolled out new deployments of the calico pods and restarted the hosts, such that the settings are applied.

So this is the current state of the system. I’m not sure where to proceed from here on. Is there anything that I can do to debug this problem and get more information? Do I have to tell calico that it should accept the public subnet IPs? Is there something else I may need to adjust in the iptables? Are there kernel modules that you may know of, that are needed but are often not enabled by default (overlay and br_netfilter are enabled)?

I would be very excited if you could help me out here. Maybe others will also face this problem (that’s why I tried to keep everything as vanilla as possible)

Some more metadata:

OS: Ubuntu 20.10 kernel 5.8.0

Host: Standard Hetzner ServerBroker host machine.

LoadBalancer: MetalLB 0.9.6

MetalLB Address Range: xxx.xxx.xxx.218 – xxx.xxx.xxx.222

vSwitch Public Subnet: xxx.xxx.xxx.216/29

Host count: 3

Host addresses: 10.0.1.2/24 => host1: 10.0.1.2; host2: 10.0.1.3; host4: 10.0.1.4

Kubernetes installation: kubeadm

CNI: Calico 3.18.1

Hi, not sure if it is the issue, but newer versions from calico modify the ip rules in an 90 seconds interval, which will overwrite the netplan configuration. Anyways, I’m not using a public subnet anymore, since Hetzner is offering cloud load balancers now.

FIY for anybody wanting to use vSwitches for HA. I’ve had a talk with Hetzner technicians and figured out, that they actualy don’t have any reduncancy for their vSwitches whatsoever. This means that while you may not need to care about fixing stuff when a vSwitch goes down, you’ll still have downtime for as long as it takes Hetzner to fix the problem. Just something to think about when going this direction.

That’s right, but you have also the possibility to order your own private network (even with 10G support). And no, I’m (unfortunately) not getting paid for informing about this 😉

Does anybody have any experience with the stability of the vSwitches at Hetzner? The lack of redundancy pointed out here does not indicate that this is suitable for a HA production environment, but has anyone tried it out for a while and have anything to share regarding the stability?

Great article by the way!

For now, I have not experienced any major issues with the vSwitch. During announced maintenance, there was a small amount of packet lost, but recovered quickly. However, you can still order your own private 10G network between your servers with hetzner – if you want more stability. So you could use 10G as main internal network and vSwitch as a backup for example, which gives you quite a redundancy. Alternatively, it would of course also be possible to work on your own rack with own switches (LACP, multiple NICs per server etc) – then you have full redundancy (ok, not really, you need backup computing center.. 😀 …)

I am also interested in knowing what is the overall experience with Hetzner vSwitch. My experience with Hetzner vSwitch is miserable, but I am not sure it’s just me missing something. I have a Proxmox VE cluster running on a few bare metal servers at Hetzner, and have a bunch of VMs connected to vSwitch. The VMs can talk to each other over vSwitch, but sometime they just stop working. Sometime disconnecting the servers from the vSwitches and re-connecting again (as advised by Hetzner support ) helps, sometime doesn’t.

To guarantee really HA I would suggest to put all your machines in a single rack, order a physical 10GB switch and 10GB NIC for each cluster node, so you have a separated physical local network to the cluster, isolated from the internet exposed network.

I agree, that would help to have HA within the cluster, but not HA for external availability, e.g., when the rack’s switch fails (already experienced). So, there are pros and cons for putting them in a single rack. If I’m wrong with this, e.g., if there are multiple uplink switches, please let me know.

If one is interested in using a public subnet along with the default public-ip see

https://serverfault.com/questions/1085433/nat-packet-goes-out-on-wrong-gateway

Hi there,

i had the same issues with the latest calico installation.

Even changing Felix tables configuration didn’t helped.

After switching calico to flannel it worked without any issues.

Just make sure you change again the MTU back to 1400.

Best regards